Purpose

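The evolution from SDKs embedded in applications, to the Istio service mesh, to the recently proposed proxyless model has been remarkably fast. I started out studying how service registration and discovery changed along the way, but the topic turned out to be too big, so I need to take it step by step: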

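- How does the sidecar take over traffic?
- Leaving existing microservice frameworks aside, how can registration and discovery be implemented, and what approaches are there?
- How should registration and discovery be integrated with existing microservice frameworks?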

I'll work through these one at a time. As long as I keep digging in this main direction, I'm bound to learn something.

Today I'll share the first one: how the sidecar takes over traffic.

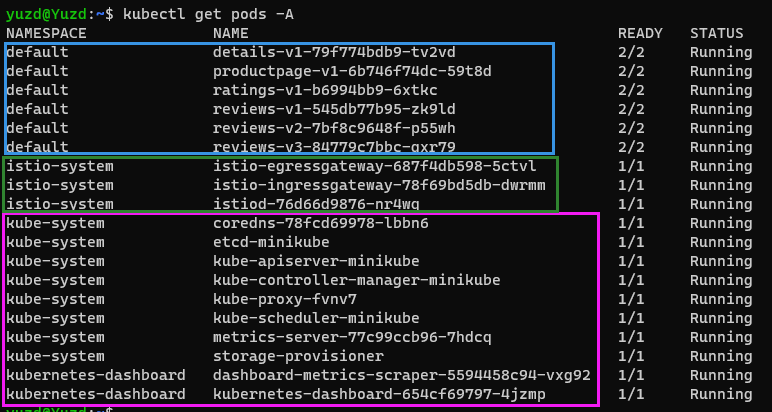

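The whole Istio bookinfo environment is set up following the official docs:

- First install Kubernetes (I used minikube)
- Then install Istio
- Enable sidecar injection for the `default` namespace, then run the bookinfo sample from `samples`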
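The setup steps can be scripted roughly as follows. This is a minimal sketch. It assumes `minikube`, `istioctl` and `kubectl` are on PATH, and that the script runs from the root of an unpacked Istio release so the `samples/` path resolves. The `istioctl install --set profile=demo -y` step is an assumption based on Istio's getting-started guide, and `run_setup` is a hypothetical helper. By default it only returns the planned commands (dry run).

```python
import shlex
import subprocess

# Hypothetical ordered setup steps; the istioctl profile is an assumption.
SETUP_STEPS = [
    "minikube start",
    "istioctl install --set profile=demo -y",
    "kubectl label namespace default istio-injection=enabled",
    "kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml",
]


def run_setup(steps=SETUP_STEPS, dry_run=True):
    """Return each step as an argv list; execute them in order unless dry_run."""
    planned = []
    for step in steps:
        argv = shlex.split(step)
        planned.append(argv)
        if not dry_run:
            # check=True aborts at the first failing step
            subprocess.run(argv, check=True)
    return planned


if __name__ == "__main__":
    for argv in run_setup():
        print(" ".join(argv))
```

Call `run_setup(dry_run=False)` to actually execute the steps against a local machine.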

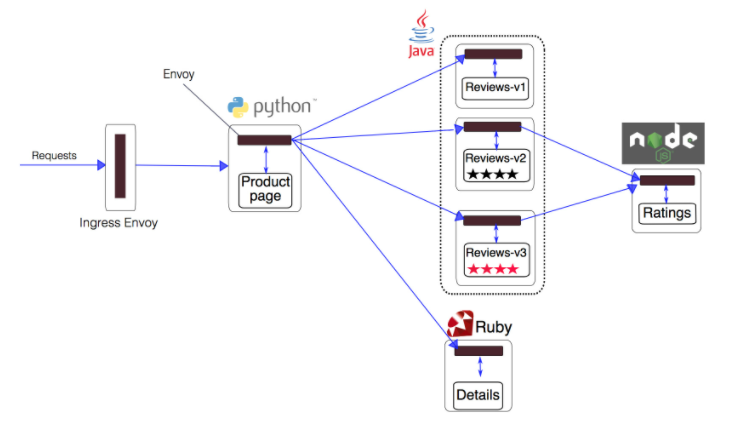

下图是使用 Istio 管理的 bookinfo 示例的访问请求路径图

Sidecar injection

First, enable sidecar injection:

kubectl label namespace default istio-injection=enabled

Deploy bookinfo:

kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml

成功后

-

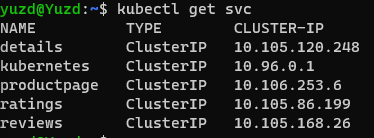

会创建svc:

-

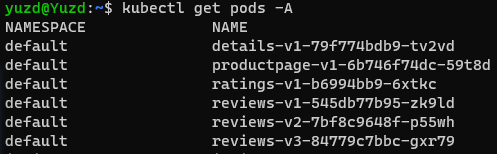

和创建pods:
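A quick way to confirm injection from the Pod list is the READY column. With one app container plus `istio-proxy`, each bookinfo Pod should report `2/2`. The sketch below parses the default `kubectl get pods` table. The helper name `check_injection` and the "one app container per Pod" heuristic are assumptions.

```python
def check_injection(get_pods_output):
    """Map each Pod name to 'ok', 'not-ready' or 'no-sidecar' using the READY column.

    Assumes the default `kubectl get pods` table and one app container per Pod,
    so a Pod with fewer than 2 containers is treated as not injected.
    """
    lines = [line for line in get_pods_output.strip().splitlines() if line.strip()]
    header = lines[0].split()
    name_idx = header.index("NAME")
    ready_idx = header.index("READY")
    result = {}
    for line in lines[1:]:
        cols = line.split()
        ready, total = (int(n) for n in cols[ready_idx].split("/"))
        if total < 2:
            result[cols[name_idx]] = "no-sidecar"
        elif ready != total:
            result[cols[name_idx]] = "not-ready"
        else:
            result[cols[name_idx]] = "ok"
    return result
```

Feed it the stdout of `kubectl get pods`, for example via `subprocess.run(["kubectl", "get", "pods"], capture_output=True, text=True).stdout`.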

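Sidecar injection is implemented with Kubernetes' admission webhook mechanism. When a Pod is created, a mutating webhook adds Istio's configuration to the Pod resource.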
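To make the webhook mechanism concrete, here is a minimal sketch of what a mutating admission webhook sends back to the API server. It returns an `AdmissionReview` response whose `patch` field is a base64-encoded JSON Patch. The two operations (adding `istio-init` and appending `istio-proxy`) mirror the containers that appear in the describe output below, but they are heavily simplified. `build_injection_response` and `apply_add_ops` are hypothetical helpers, not Istio's injector code.

```python
import base64
import copy
import json

PROXY_IMAGE = "docker.io/istio/proxyv2:1.12.1"  # image seen in the injected Pod


def build_injection_response(uid):
    """Return a trimmed AdmissionReview response that injects Istio containers.

    Hypothetical and simplified: the real injector also adds volumes, env vars,
    probes, annotations and more, and merges with existing init containers.
    """
    patch = [
        # Add an init container that sets up iptables before the app starts
        # (this overwrites any existing init containers; productpage has none).
        {"op": "add", "path": "/spec/initContainers",
         "value": [{"name": "istio-init", "image": PROXY_IMAGE}]},
        # Append the Envoy sidecar to the Pod's existing containers.
        {"op": "add", "path": "/spec/containers/-",
         "value": {"name": "istio-proxy", "image": PROXY_IMAGE}},
    ]
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": {
            "uid": uid,  # must echo the uid of the incoming request
            "allowed": True,
            "patchType": "JSONPatch",
            # The patch travels as base64-encoded JSON.
            "patch": base64.b64encode(json.dumps(patch).encode()).decode(),
        },
    }


def apply_add_ops(pod, ops):
    """Apply the 'add' operations used above to a Pod dict.

    Handles object keys and the '-' (append) array index only; this is not a
    full RFC 6902 implementation.
    """
    pod = copy.deepcopy(pod)
    for op in ops:
        if op["op"] != "add":
            raise ValueError(f"unsupported op: {op['op']}")
        *parents, last = op["path"].strip("/").split("/")
        target = pod
        for key in parents:
            target = target[key]
        if last == "-":
            target.append(op["value"])
        else:
            target[last] = op["value"]
    return pod
```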

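So what does the Pod resource look like after the webhook has modified it?

Let's analyze productpage first. Its definition in bookinfo.yaml: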

    ##################################################################################################
    # Productpage services
    ##################################################################################################
    apiVersion: v1
    kind: Service
    metadata:
      name: productpage
      labels:
        app: productpage
        service: productpage
    spec:
      ports:
      - port: 9080
        name: http
      selector:
        app: productpage
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: productpage-v1
      labels:
        app: productpage
        version: v1
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: productpage
          version: v1
      template:
        metadata:
          labels:
            app: productpage
            version: v1
        spec:
          serviceAccountName: bookinfo-productpage
          containers:
          - name: productpage
            image: docker.io/istio/examples-bookinfo-productpage-v1:1.16.2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 9080
            volumeMounts:
            - name: tmp
              mountPath: /tmp
            securityContext:
              runAsUser: 1000
          volumes:
          - name: tmp
            emptyDir: {}
    ---

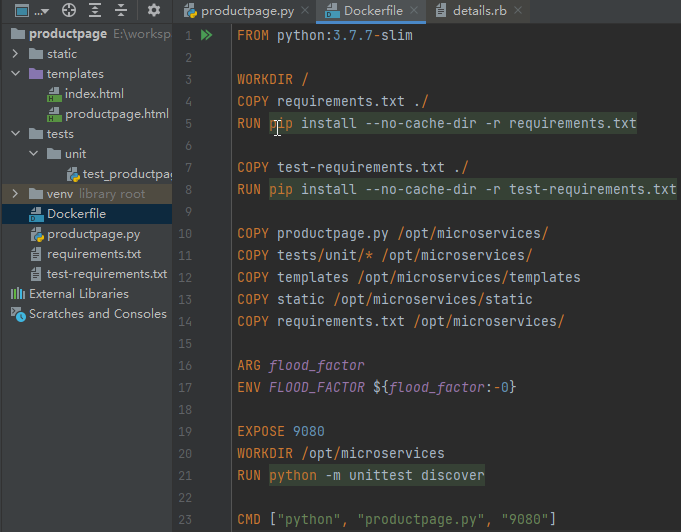

对应productpage镜像的DockerFile:

productpage是一个Flask写的python应用

Use the following command to see what the productpage Pod looks like after sidecar injection:

kubectl describe pod -l app=productpage

    Name:          productpage-v1-6b746f74dc-59t8d
    Namespace:     default
    Priority:      0
    Node:          minikube/192.168.49.2
    Start Time:    Sat, 25 Dec 2021 16:53:08 +0800
    Labels:        app=productpage
                   pod-template-hash=6b746f74dc
                   security.istio.io/tlsMode=istio
                   service.istio.io/canonical-name=productpage
                   service.istio.io/canonical-revision=v1
                   version=v1
    Annotations:   kubectl.kubernetes.io/default-container: productpage
                   kubectl.kubernetes.io/default-logs-container: productpage
                   prometheus.io/path: /stats/prometheus
                   prometheus.io/port: 15020
                   prometheus.io/scrape: true
                   sidecar.istio.io/status:
                     {"initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["istio-envoy","istio-data","istio-podinfo","istio-token","istiod-...
    Status:        Running
    IP:            172.17.0.7
    IPs:
      IP:          172.17.0.7
    Controlled By: ReplicaSet/productpage-v1-6b746f74dc
    Init Containers:
      istio-init:
        Container ID:  docker://81d9c7297737675de742388e54de51d21598da8e6a63b81d293c69b848e92ba7
        Image:         docker.io/istio/proxyv2:1.12.1
        Image ID:      docker-pullable://istio/proxyv2@sha256:4704f04f399ae24d99e65170d1846dc83d7973f186656a03ba70d47bd1aba88f
        Port:          <none>
        Host Port:     <none>
        Args:
          istio-iptables
          -p
          15001
          -z
          15006
          -u
          1337
          -m
          REDIRECT
          -i
          *
          -x
          -b
          *
          -d
          15090,15021,15020
        State:          Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Sun, 26 Dec 2021 14:23:05 +0800
          Finished:     Sun, 26 Dec 2021 14:23:05 +0800
        Ready:          True
        Restart Count:  1
        Limits:
          cpu:     2
          memory:  1Gi
        Requests:
          cpu:     10m
          memory:  40Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-clsdq (ro)
    Containers:
      productpage:
        Container ID:   docker://421334a3a5262bdbb29158c423cce3464e19ac3267c7c6240ca5d89a1d38962a
        Image:          docker.io/istio/examples-bookinfo-productpage-v1:1.16.2
        Image ID:       docker-pullable://istio/examples-bookinfo-productpage-v1@sha256:63ac3b4fb6c3ba395f5d044b0e10bae513afb34b9b7d862b3a7c3de7e0686667
        Port:           9080/TCP
        Host Port:      0/TCP
        State:          Running
          Started:      Sun, 26 Dec 2021 14:23:11 +0800
        Last State:     Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Sat, 25 Dec 2021 16:56:01 +0800
          Finished:     Sat, 25 Dec 2021 22:51:12 +0800
        Ready:          True
        Restart Count:  1
        Environment:    <none>
        Mounts:
          /tmp from tmp (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-clsdq (ro)
      istio-proxy:
        Container ID:  docker://c3d8a9d57e632112f86441fafad64eb73df3ea4f7317dbfb72152107c493866b
        Image:         docker.io/istio/proxyv2:1.12.1
        Image ID:      docker-pullable://istio/proxyv2@sha256:4704f04f399ae24d99e65170d1846dc83d7973f186656a03ba70d47bd1aba88f
        Port:          15090/TCP
        Host Port:     0/TCP
        Args:
          proxy
          sidecar
          --domain
          $(POD_NAMESPACE).svc.cluster.local
          --proxyLogLevel=warning
          --proxyComponentLogLevel=misc:error
          --log_output_level=default:info
          --concurrency
          2
        State:          Running
          Started:      Sun, 26 Dec 2021 14:23:13 +0800
        Last State:     Terminated
          Reason:       Completed
          Exit Code:    0
          Started:      Sat, 25 Dec 2021 16:56:01 +0800
          Finished:     Sat, 25 Dec 2021 22:51:15 +0800
        Ready:          True
        Restart Count:  1
        Limits:
          cpu:     2
          memory:  1Gi
        Requests:
          cpu:     10m
          memory:  40Mi
        Readiness:  http-get http://:15021/healthz/ready delay=1s timeout=3s period=2s #success=1 #failure=30
        Environment:
          JWT_POLICY:                    third-party-jwt
          PILOT_CERT_PROVIDER:           istiod
          CA_ADDR:                       istiod.istio-system.svc:15012
          POD_NAME:                      productpage-v1-6b746f74dc-59t8d (v1:metadata.name)
          POD_NAMESPACE:                 default (v1:metadata.namespace)
          INSTANCE_IP:                    (v1:status.podIP)
          SERVICE_ACCOUNT:                (v1:spec.serviceAccountName)
          HOST_IP:                        (v1:status.hostIP)
          PROXY_CONFIG:                  {}
          ISTIO_META_POD_PORTS:          [
                                             {"containerPort":9080,"protocol":"TCP"}
                                         ]
          ISTIO_META_APP_CONTAINERS:     productpage
          ISTIO_META_CLUSTER_ID:         Kubernetes
          ISTIO_META_INTERCEPTION_MODE:  REDIRECT
          ISTIO_META_WORKLOAD_NAME:      productpage-v1
          ISTIO_META_OWNER:              kubernetes://apis/apps/v1/namespaces/default/deployments/productpage-v1
          ISTIO_META_MESH_ID:            cluster.local
          TRUST_DOMAIN:                  cluster.local
        Mounts:
          /etc/istio/pod from istio-podinfo (rw)
          /etc/istio/proxy from istio-envoy (rw)
          /var/lib/istio/data from istio-data (rw)
          /var/run/secrets/istio from istiod-ca-cert (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-clsdq (ro)
          /var/run/secrets/tokens from istio-token (rw)

(rest omitted)

As you can see, configuration for two new components has been added:

- istio-init
- istio-proxy
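The injector also records what it added in the `sidecar.istio.io/status` annotation, which appears truncated in the describe output above. Below is a minimal sketch that reads it back. It assumes the annotations come from `kubectl get pod <name> -o json` (`metadata.annotations`). The helper names are hypothetical, and only the `initContainers` and `containers` keys are used.

```python
import json
import subprocess


def load_annotations(pod_name, namespace="default"):
    """Fetch a Pod's annotations with kubectl (requires cluster access)."""
    out = subprocess.run(
        ["kubectl", "get", "pod", pod_name, "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return json.loads(out)["metadata"].get("annotations", {})


def injected_by_istio(annotations):
    """Return (init_containers, containers) recorded by the Istio injector.

    The annotation value is a JSON document; a Pod without it was not injected.
    """
    raw = annotations.get("sidecar.istio.io/status")
    if raw is None:
        return [], []
    status = json.loads(raw)
    return status.get("initContainers", []), status.get("containers", [])
```

Usage: `injected_by_istio(load_annotations("productpage-v1-6b746f74dc-59t8d"))` should list `istio-init` and `istio-proxy` for the Pod above.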

istio-init

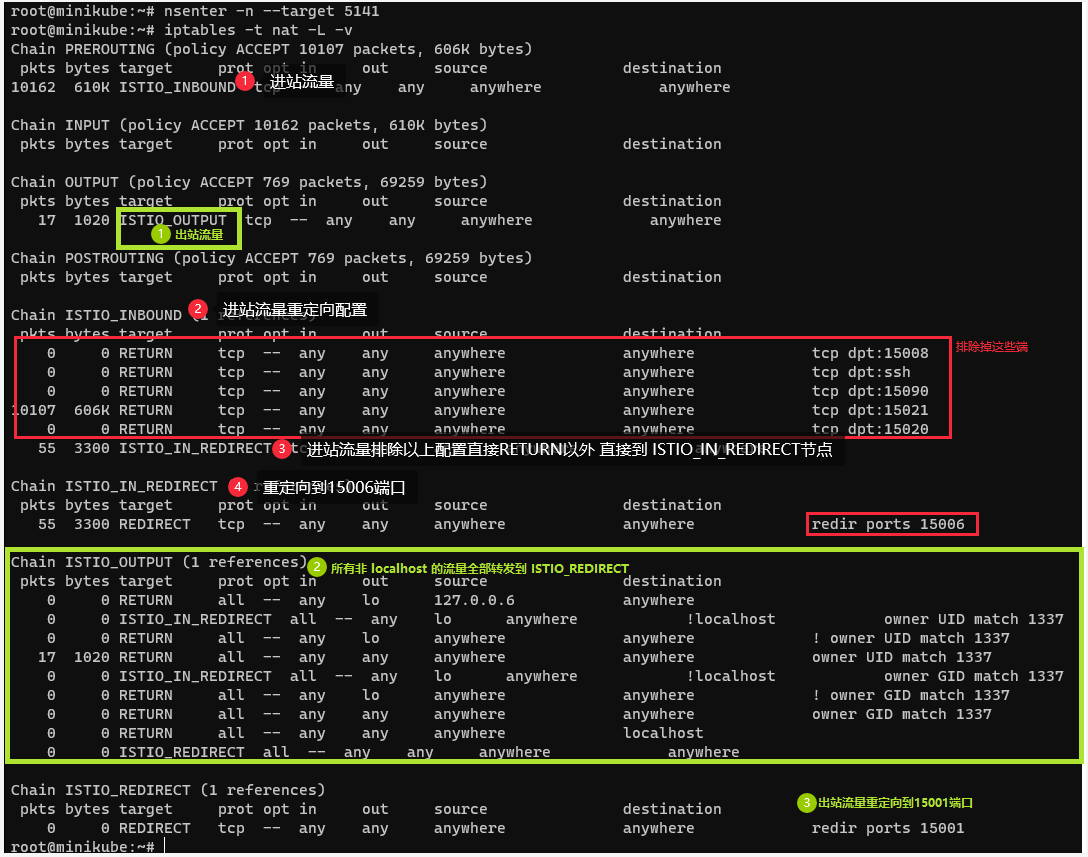

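An init container that configures iptables in the Pod so that both inbound and outbound traffic are redirected to designated sidecar ports.

Image used: docker.io/istio/proxyv2:1.12.1

Newer Istio versions have rewritten this in Go. Older versions configured iptables with a shell script.

The old version may be easier to follow: https://github.com/istio/istio/blob/0.5.0/tools/deb/istio-iptables.sh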

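Startup arguments:

    istio-iptables
    -p 15001          # redirect all outbound TCP traffic to this port
    -z 15006          # redirect all inbound TCP traffic to this port
    -u 1337           # UID whose traffic is not redirected (the proxy runs as this user)
    -m REDIRECT       # mode used to redirect inbound connections (REDIRECT or TPROXY)
    -i * -x "" -b *   # redirect all outbound IP ranges, exclude none, capture all inbound ports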

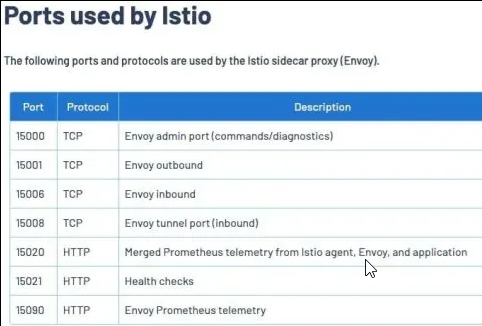

    -d 15090,15021,15020   # internal Istio ports excluded from inbound interception (see the table)
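To see how these flags turn into rules, here is a simplified sketch that emits `nat`-table rules similar to what `istio-iptables` produces in REDIRECT mode. The chain names (`ISTIO_INBOUND`, `ISTIO_IN_REDIRECT`, `ISTIO_OUTPUT`, `ISTIO_REDIRECT`) match Istio's. The rule list is deliberately incomplete: the real tool adds further rules, for example for loopback traffic and GID matches. Treat `sketch_nat_rules` as an approximation, not the tool's exact output.

```python
def sketch_nat_rules(outbound_port=15001, inbound_port=15006, proxy_uid=1337,
                     excluded_inbound_ports=(15090, 15021, 15020)):
    """Return a simplified list of `iptables -t nat` rules for REDIRECT mode."""
    rules = [
        # Custom chains
        "-N ISTIO_INBOUND",
        "-N ISTIO_IN_REDIRECT",
        "-N ISTIO_OUTPUT",
        "-N ISTIO_REDIRECT",
        # -z: inbound TCP goes to Envoy's inbound listener
        f"-A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports {inbound_port}",
        # -p: outbound TCP goes to Envoy's outbound listener
        f"-A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports {outbound_port}",
        # Hook the custom chains into the built-in nat chains
        "-A PREROUTING -p tcp -j ISTIO_INBOUND",
        "-A OUTPUT -p tcp -j ISTIO_OUTPUT",
    ]
    # -d: Istio's internal ports are left alone
    for port in excluded_inbound_ports:
        rules.append(f"-A ISTIO_INBOUND -p tcp --dport {port} -j RETURN")
    # -b '*': every other inbound port is captured
    rules.append("-A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT")
    # -u: traffic sent by Envoy itself (running as proxy_uid) is not redirected again
    rules.append(f"-A ISTIO_OUTPUT -m owner --uid-owner {proxy_uid} -j RETURN")
    # Plain localhost traffic is not redirected (-x "" excludes no other ranges)
    rules.append("-A ISTIO_OUTPUT -d 127.0.0.1/32 -j RETURN")
    # -i '*': all remaining outbound traffic is captured
    rules.append("-A ISTIO_OUTPUT -j ISTIO_REDIRECT")
    return rules


if __name__ == "__main__":
    for rule in sketch_nat_rules():
        print("iptables -t nat", rule)
```

Rule order matters: the RETURN rules are appended before the catch-all jump, so excluded ports and Envoy's own traffic exit the chain first.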

istio-proxy

Starts the sidecar.

Image used: docker.io/istio/proxyv2:1.12.1

Arguments:

    proxy
    sidecar
    --domain
    $(POD_NAMESPACE).svc.cluster.local
    --proxyLogLevel=warning
    --proxyComponentLogLevel=misc:error
    --log_output_level=default:info
    --concurrency
    2
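`$(POD_NAMESPACE)` in `--domain` is not expanded by a shell. Kubernetes substitutes `$(VAR)` references in a container's `command`/`args` with that container's environment variables. Here `POD_NAMESPACE` comes from `metadata.namespace`, as the Environment section of the describe output shows, so the domain becomes `default.svc.cluster.local`. Below is a minimal sketch of that substitution rule. `expand_k8s_refs` is a hypothetical name, and the variable-name pattern is simplified.

```python
import re

# Matches the "$$" escape or a $(NAME) reference.
_REF = re.compile(r"\$\$|\$\(([A-Za-z_][A-Za-z0-9_]*)\)")


def expand_k8s_refs(arg, env):
    """Expand $(VAR) references following Kubernetes' rules for command/args.

    - $(VAR) is replaced by env[VAR] when VAR is defined
    - undefined references are left unchanged
    - $$ collapses to a single $, so $$(VAR) yields the literal $(VAR)
    """
    def repl(match):
        if match.group(0) == "$$":
            return "$"
        return env.get(match.group(1), match.group(0))

    return _REF.sub(repl, arg)
```

Usage: `expand_k8s_refs("$(POD_NAMESPACE).svc.cluster.local", {"POD_NAMESPACE": "default"})` gives `default.svc.cluster.local`.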

Checking how iptables is configured

    # Enter the minikube node
    minikube ssh
    # Switch to root (minikube's default user is docker)
    sudo -i

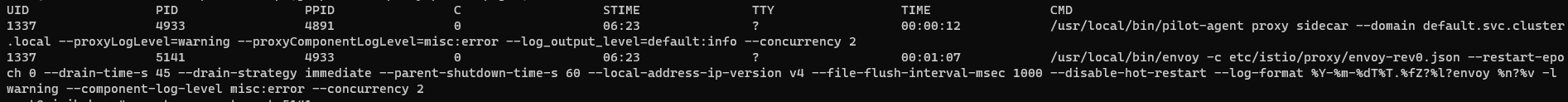

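    # List the processes running in the istio-proxy container (one is pilot-agent, the other is envoy)
    docker top `docker ps|grep "istio-proxy_productpage"|cut -d " " -f1`
    # Enter the network namespace of either PID printed by the previous command (5141 here)
    nsenter -n --target 5141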
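Inside the Pod's network namespace, `iptables -t nat -S` lists the NAT rules. The same can be run in one step from the node with `nsenter -n --target <PID> iptables -t nat -S`. The output is easier to read when grouped by chain. Below is a small sketch that does this. The helper names are hypothetical.

```python
def group_rules_by_chain(iptables_s_output):
    """Group `iptables -t nat -S` lines by chain, preserving rule order."""
    chains = {}
    for line in iptables_s_output.splitlines():
        parts = line.split()
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        flag, chain = parts[0], parts[1]
        if flag in ("-P", "-N"):
            # Policy / new-chain lines only declare the chain
            chains.setdefault(chain, [])
        elif flag == "-A":
            # Keep the rule text after "-A CHAIN"
            chains.setdefault(chain, []).append(" ".join(parts[2:]))
    return chains


def istio_chains(iptables_s_output):
    """Keep only the chains Istio created (names starting with ISTIO_)."""
    return {name: rules
            for name, rules in group_rules_by_chain(iptables_s_output).items()
            if name.startswith("ISTIO_")}
```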

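After Istio injection, the Pod consists of the following containers:

- k8s_infra (also called the pause container; think of it as the base of the Pod)
- istio_init (configures iptables)
- app (the application container)
- istio_proxy (starts pilot-agent and envoy, as shown in the figure)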

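My understanding is that they start in the order listed above. For containers in the same Pod to share a network stack, there must be a base container that holds it, and the other containers join that network stack with `--net=container:<id>`. So I think the infra container must start first.

Whether the init container or the infra container starts first, I couldn't find any documentation on. If I've got this wrong, corrections are very welcome!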
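For reference, the Kubernetes documentation states that init containers run one after another, each to completion, before any app container starts. In the kubelet, the Pod sandbox (the pause/infra container that owns the network namespace) is created before any of them. Below is a toy model of that order using this Pod's container names. `PodStartup` is hypothetical and ignores restarts, restart policies and probes.

```python
from dataclasses import dataclass, field


@dataclass
class PodStartup:
    """Toy model of the order in which a Pod's containers are brought up.

    1. The sandbox (pause/infra container) is created; it owns the netns.
    2. Init containers run one at a time, each to completion, in spec order.
    3. Only then are the regular containers started.
    """
    init_containers: list
    containers: list
    log: list = field(default_factory=list)

    def start(self, run_init):
        """run_init(name) -> bool simulates whether an init container succeeds."""
        self.log.append("sandbox(pause)")
        for name in self.init_containers:
            self.log.append(name)
            if not run_init(name):
                return False  # a failing init container blocks everything after it
        self.log.extend(self.containers)
        return True
```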

Summary

Istio's sidecar injection uses iptables to intercept the Pod's inbound and outbound traffic and redirect it to Envoy:

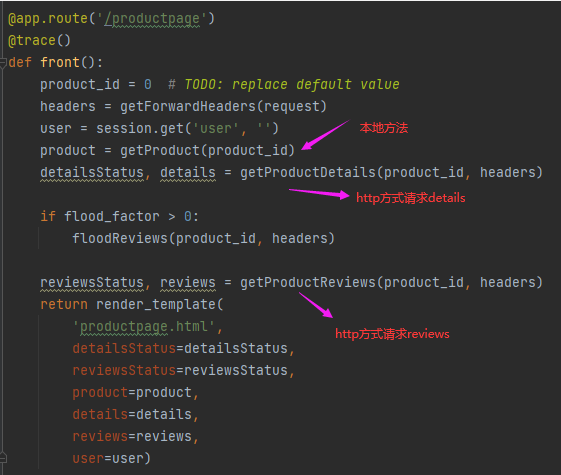

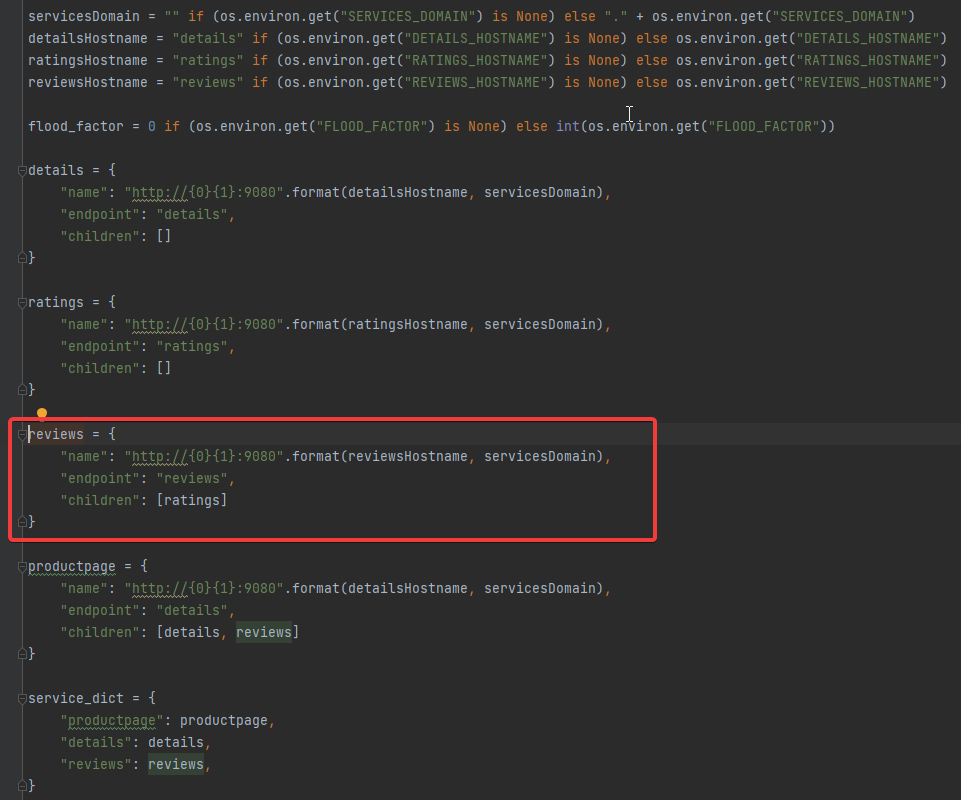
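The interception described in the summary can be modeled as a small decision function. This is a simplified sketch that uses the flag values passed to `istio-init` (15001, 15006, UID 1337, excluded ports 15090/15021/15020). It ignores the loopback special cases the real rules handle. `nat_decision` is a hypothetical helper.

```python
def nat_decision(direction, dport, uid=None, dst=None,
                 outbound_port=15001, inbound_port=15006, proxy_uid=1337,
                 excluded_inbound=(15090, 15021, 15020)):
    """Return the Envoy port a new TCP connection is redirected to, or None.

    direction: "inbound"  -> connection arriving at the Pod (nat PREROUTING)
               "outbound" -> connection opened by a process in the Pod (nat OUTPUT)
    """
    if direction == "inbound":
        # -d: Istio's own ports are not intercepted
        if dport in excluded_inbound:
            return None
        # -b '*': everything else goes to Envoy's inbound listener (-z)
        return inbound_port
    if direction == "outbound":
        # -u: connections opened by Envoy itself must not loop back into Envoy
        if uid == proxy_uid:
            return None
        # Plain localhost traffic is left alone
        if dst == "127.0.0.1":
            return None
        # -i '*': everything else goes to Envoy's outbound listener (-p)
        return outbound_port
    raise ValueError(f"unknown direction: {direction!r}")
```

Consider productpage, which runs as UID 1000 per its `securityContext`, calling `reviews:9080`. The outbound connection lands on 15001. When Envoy (UID 1337) forwards it, the connection is left alone. On the reviews Pod, the incoming connection to 9080 is sent to 15006.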

Back to the productpage code:

    import requests  # HTTP client used by productpage

    # `reviews` is a module-level dict defined elsewhere in productpage.py;
    # in this setup reviews['name'] is http://reviews:9080
    # product_id defaults to 0
    def getProductReviews(product_id, headers):
        # Do not remove. Bug introduced explicitly for illustration in fault injection task
        # TODO: Figure out how to achieve the same effect using Envoy retries/timeouts
        for _ in range(2):
            try:
                url = reviews['name'] + "/" + reviews['endpoint'] + "/" + str(product_id)
                res = requests.get(url, headers=headers, timeout=3.0)
            except BaseException:
                res = None
            if res and res.status_code == 200:
                return 200, res.json()
        status = res.status_code if res is not None and res.status_code else 500
        return status, {'error': 'Sorry, product reviews are currently unavailable for this book.'}

The reviews URL being requested is: http://reviews:9080/reviews/0
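From the application's point of view nothing changes: it simply requests `http://reviews:9080/reviews/0`, and the interception is transparent. To exercise the two-attempt loop above without a cluster, here is the same control flow with the HTTP client passed in. `get_product_reviews` and `FakeResponse` are hypothetical test helpers. The sketch catches `Exception` rather than `BaseException` so that Ctrl-C is not swallowed.

```python
class FakeResponse:
    """Minimal stand-in for requests.Response (status_code and json())."""

    def __init__(self, status_code, body=None):
        self.status_code = status_code
        self._body = body or {}

    def json(self):
        return self._body


def get_product_reviews(product_id, headers, get,
                        base="http://reviews:9080", endpoint="reviews"):
    """Same control flow as getProductReviews, with the HTTP call injected."""
    url = base + "/" + endpoint + "/" + str(product_id)
    res = None
    for _ in range(2):  # two attempts, as in the original
        try:
            res = get(url, headers=headers, timeout=3.0)
        except Exception:
            res = None
        if res is not None and res.status_code == 200:
            return 200, res.json()
    status = res.status_code if res is not None and res.status_code else 500
    return status, {"error": "Sorry, product reviews are currently unavailable for this book."}
```

In the real app, `get` would be `requests.get`. Here a stub that fails once and then succeeds shows that the second attempt is used.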

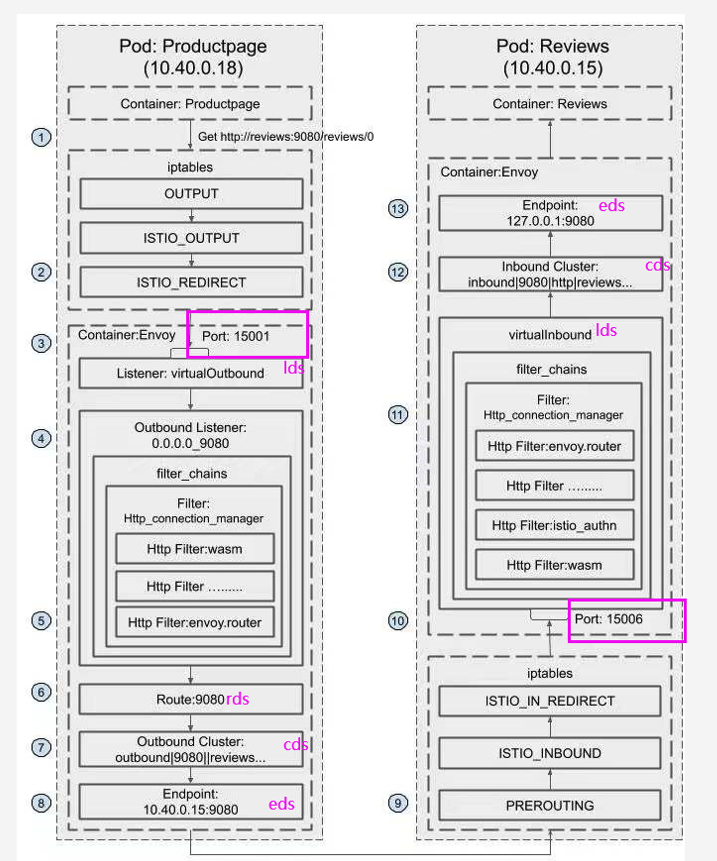

After the sidecar intercepts the request, the traffic flows as shown in the figure:

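You are probably wondering: for a hostname-based request like http://reviews:9080/reviews/0, how does Envoy know the actual address to send it to? Is it kube-proxy? That's a topic for next time!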

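Thanks to my good friend Raphael.Goh and Istio expert dozer for their technical guidance, which is helping me gradually get inside Istio and appreciate its beauty!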